AI And The Energy Equation

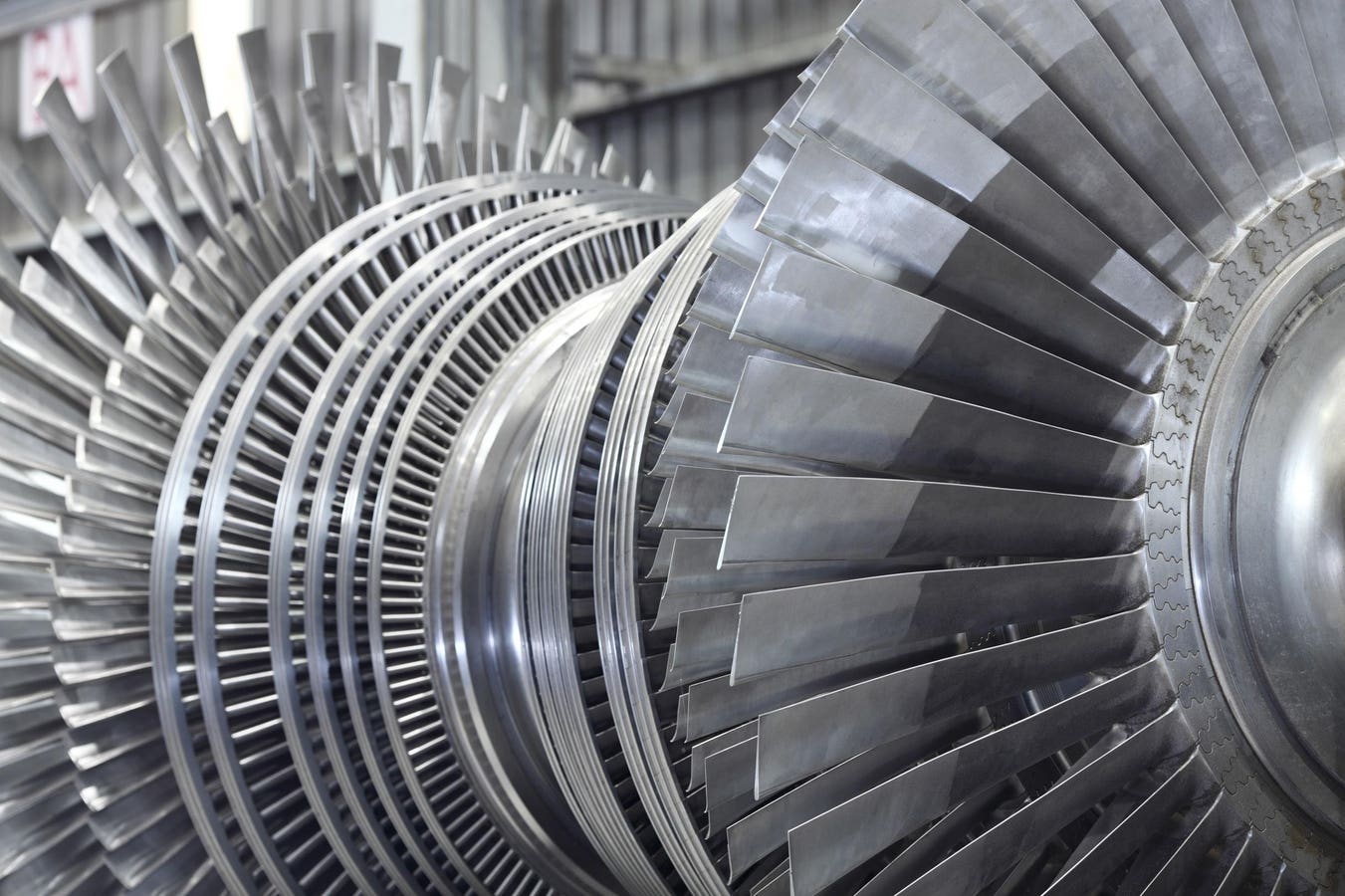

Internal rotor of a steam turbine at a workshop (Getty)

As the community of IT leaders and experts ponders the most likely future of technology, in which we outsource more and more cognition to AI, people are also thinking about energy and power.

Had you asked me a few years ago, I wouldn’t have thought that this particular aspect of IT would be so front and center today. It’s also a strange thing to quantify, because of how it contrasts with our own brains. We humans take in fuel in odd and inefficient ways. Computers, by contrast, we know how to power: we know their energy needs down to the last detail. Or do we?

This was brought home to me in a thought-provoking talk by Vijay Gadepally, in which he went into some of the considerations for conserving energy while interacting with chatbots and AI agents, or while using data centers to process gigantic amounts of information.

It’s important that we think about this, and it’s interesting how nuanced AI’s power needs can be.

Five Commandments for Energy Savings

Throughout the presentation, Gadepally returned to five principles for conserving energy in AI operations. I included all five slides because they are a good way to see much of the detail behind each point. The main strategies are: know the impact of what AI is doing, provide power on an as-needed basis, reduce the computing budget by optimizing, consider using smaller models or ensemble learning, and make the underlying systems more sustainable.

Measuring Power Needs

First, Gadepally suggests we have to start with a good reckoning of how much power we’re using. If we have transparency into the energy cost of a ChatGPT query, we can weigh risk against reward (or cost against gain) and decide what to focus on in daily operations. Water is part of the bill, too: researchers have estimated that asking ChatGPT a series of questions uses about a 16-ounce bottle of water, and as experts have pointed out, this has to be drinking-quality water in order to support the infrastructure. So it’s literally taking potable water out of people’s mouths.
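Knowing the impact starts with simple arithmetic: energy is average power multiplied by time. The sketch below assumes you can measure the average power draw and duration of each query (for instance from GPU telemetry). The `QueryMeasurement` type and the sample numbers are hypothetical illustrations, not figures from Gadepally’s talk.

```python
# A minimal sketch of per-query energy accounting. All inputs are
# hypothetical placeholders; real figures would come from measured
# power draw and the serving system's own logs.
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class QueryMeasurement:
    avg_power_watts: float   # average power drawn while serving the query (assumed measured)
    duration_seconds: float  # wall-clock time spent serving the query


def energy_wh(m: QueryMeasurement) -> float:
    """Energy in watt-hours: E = P * t, converting seconds to hours."""
    return m.avg_power_watts * m.duration_seconds / 3600.0


def total_energy_wh(measurements: list[QueryMeasurement]) -> float:
    """Sum energy over a batch of queries to see what a workload costs."""
    return sum(energy_wh(m) for m in measurements)


if __name__ == "__main__":
    # Illustrative numbers only: a quick answer vs. a longer one.
    batch = [
        QueryMeasurement(avg_power_watts=300.0, duration_seconds=2.0),
        QueryMeasurement(avg_power_watts=300.0, duration_seconds=12.0),
    ]
    for m in batch:
        print(f"{energy_wh(m):.3f} Wh")
    print(f"total: {total_energy_wh(batch):.3f} Wh")
```

Once per-query numbers like these exist, they can be weighed against the value of each query, which is the cost-versus-gain trade-off described above.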

That’s to say nothing of these technologies’ actual electricity use, at a time when much of our electricity is still generated by burning fossil fuels.

This leads us to some of Gadepally’s other points about lowering the energy footprint of AI operations.

The Benefits of Optimization

To reduce the computing budget, he suggests we focus on the particular problems that matter most. We shouldn’t just let these systems run to see what they can do while the energy costs mount.

Here’s an interesting example Gadepally points out: inference, which he refers to as an “energy hog.”

Do computers use more power when they think harder? The short answer is yes.

Right now, inference is all the rage as we marvel at the ability of LLMs to buckle down and concentrate on a particular question or idea. But that kind of sustained reasoning uses more energy, and it may only be needed for certain higher-level workloads.

Then there’s Gadepally’s suggestion that we can use smaller models for some jobs. This sort of triangulation, which he calls “telemetry,” breaks energy needs down into components we can manage in order to, as he says, “reduce Capex and Opex.” (You’ll have to forgive the corporate-speak.)
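One common way to act on the “smaller models for some jobs” idea is a router that sends each request to the smallest model judged adequate and reserves a large reasoning model for harder work. This is a swapped-in illustration, not Gadepally’s method: the model names, the per-request energy figures (imagined as coming from telemetry), and the difficulty heuristic are all hypothetical assumptions.

```python
# A hypothetical router: use the small model by default and escalate to
# the large reasoning model only for requests judged to need it.
# Model names, energy figures, and the heuristic are illustrative assumptions.
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Model:
    name: str
    energy_wh_per_request: float  # assumed average cost per request, e.g. from telemetry


SMALL = Model("small-model", 0.05)
LARGE = Model("large-reasoning-model", 1.0)


def needs_large_model(prompt: str, flagged_complex: bool) -> bool:
    """Crude stand-in heuristic: escalate only flagged or very long requests."""
    return flagged_complex or len(prompt.split()) > 200


def route(prompt: str, flagged_complex: bool = False) -> Model:
    """Pick the model that should serve this request."""
    return LARGE if needs_large_model(prompt, flagged_complex) else SMALL


def workload_energy_wh(requests: list[tuple[str, bool]]) -> float:
    """Total estimated energy when every request goes through the router."""
    return sum(route(p, c).energy_wh_per_request for p, c in requests)


if __name__ == "__main__":
    requests = [
        ("Tell me a short bedtime story about a dragon.", False),
        ("Summarize this paragraph.", False),
        ("Plan a multi-step drone inspection route.", True),
    ]
    routed = workload_energy_wh(requests)
    all_large = LARGE.energy_wh_per_request * len(requests)
    print(f"routed: {routed:.2f} Wh vs. all-large: {all_large:.2f} Wh")
```

The savings here depend entirely on the assumed energy figures and on how good the escalation heuristic is; a real deployment would need measured costs and a check that the small model’s answers are actually acceptable.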

Finally, there’s the recommendation to build more sustainable systems. One of the biggest examples is co-locating energy sources and data centers on the same piece of land, so that less energy is lost in transmission.

Another overarching strategy (I don’t think Gadepally talked about this specifically, but it’s been on my mind) is to ramp up safe nuclear energy production. That is easier said than done, given examples like Chernobyl and Three Mile Island, but it’s reasonable to hope that nuclear safety has made great strides since then. The U.S. is looking, for example, at China’s successful use of small nuclear facilities to generate electricity without burning fossil fuels.

The bottom line, though, is that we’re going to need an ‘any and all’ approach, which is one reason I found Gadepally’s talk so appealing. Whether it’s his example of allowing children to generate bedtime stories, or monitoring the use of drones in industries like defense and transportation, we’ll need to be thinking about how to manage the energy costs as we go.

